Process

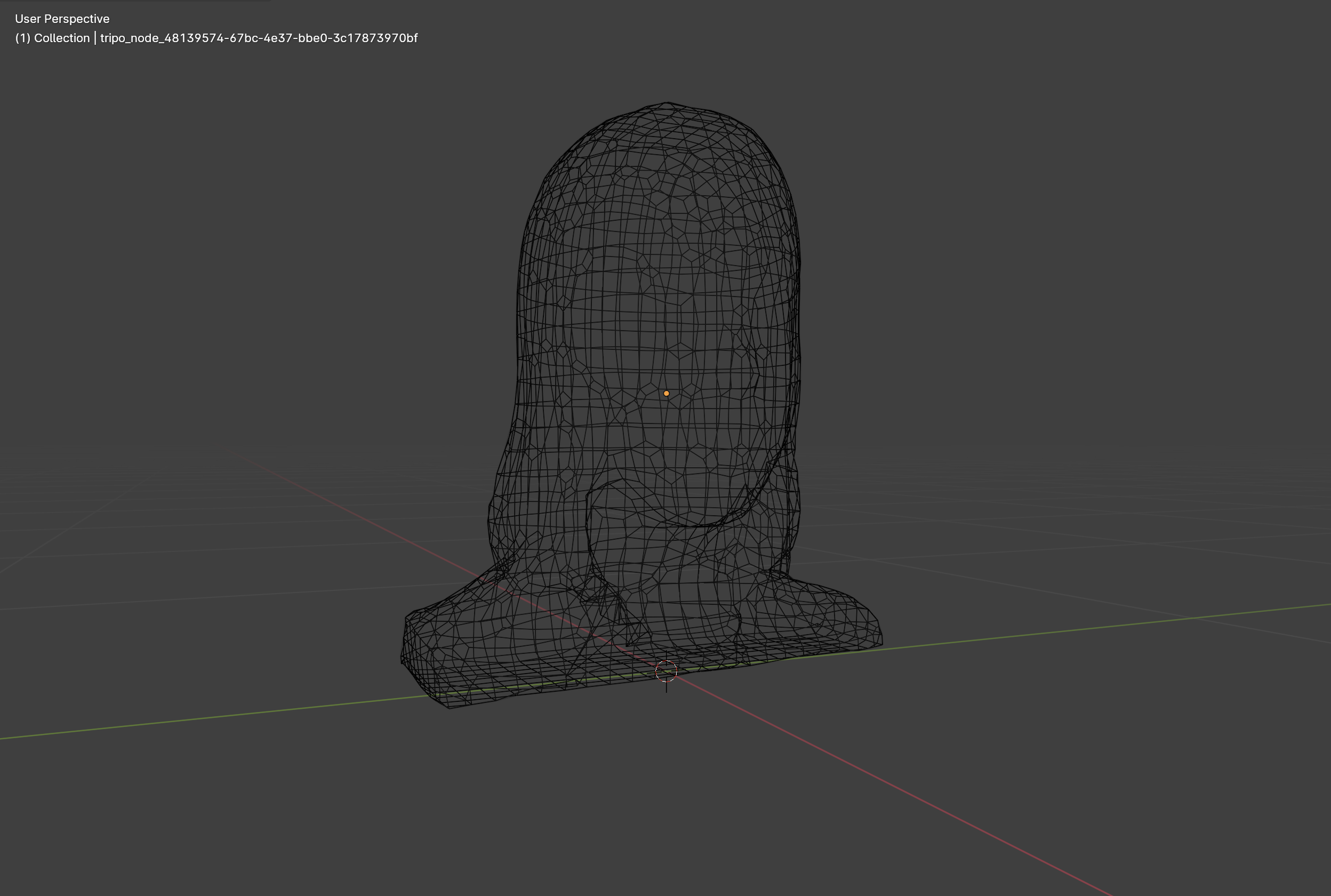

Image to 3D Asset

I used AI to create a simple 3D mesh from a portrait photograph.

The 3D asset is then used for a wireframe/turntable animation.
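As a rough illustration of what a turntable animation involves, the sketch below computes evenly spaced camera positions on a circle around the mesh. It assumes a y-up coordinate system with the mesh at the origin. The function name and the `radius` and `height` parameters are made up for this example and do not come from any specific 3D tool. Many 3D packages instead rotate the object in front of a fixed camera, which gives the same result on screen.

```python
import math


def turntable_camera_positions(num_frames, radius=3.0, height=0.0):
    """Return one (x, y, z) camera position per frame on a circle around the origin.

    Assumption: y is the up axis and the camera always looks at the origin.
    """
    positions = []
    for frame in range(num_frames):
        # Spread the frames evenly over one full revolution (2 * pi radians).
        angle = 2.0 * math.pi * frame / num_frames
        x = radius * math.cos(angle)
        z = radius * math.sin(angle)
        positions.append((x, height, z))
    return positions


# Example: 120 frames for one full rotation (5 seconds at 24 fps).
cameras = turntable_camera_positions(120, radius=3.0, height=0.5)
```

Because the last frame stops one step short of a full revolution, the sequence loops without a duplicated frame.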

Face morphs

Starting from a portrait photograph, I generated several images with Stable Diffusion, each with slightly tweaked features. The features can be adjusted to achieve different results, for example more child-like or more masculine traits.

I then animated clips morphing between them.

Videogame-style characters

For this section of the film I generated several visuals of virtual characters, both as portraits and in an office setting. The office scenes were animated using image-to-video tools.

Stable Diffusion + ControlNet + Style Transfer

These clips were created with a workflow based on a depth map and style references. The style reference is a cross-fade between a normal portrait and stylised images (a glass flower and a fish-woman).
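The cross-fade used for the style reference can be sketched as a per-pixel linear blend between two images. This is a minimal illustration, not the actual workflow. It assumes two same-sized grayscale images stored as nested lists of floats, and the function names are hypothetical. In practice an image library or a node in the generation tool would handle this step.

```python
def cross_fade(image_a, image_b, t):
    """Blend two same-sized images: t=0.0 gives image_a, t=1.0 gives image_b.

    Assumption: images are nested lists of floats (rows of pixel values).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0.0 and 1.0")
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same height")
    blended = []
    for row_a, row_b in zip(image_a, image_b):
        if len(row_a) != len(row_b):
            raise ValueError("images must have the same width")
        # Linear interpolation for each pixel pair.
        blended.append([(1.0 - t) * a + t * b for a, b in zip(row_a, row_b)])
    return blended


def cross_fade_sequence(image_a, image_b, num_frames):
    """Return num_frames blended images going from image_a to image_b."""
    if num_frames < 2:
        raise ValueError("need at least two frames")
    return [
        cross_fade(image_a, image_b, i / (num_frames - 1))
        for i in range(num_frames)
    ]
```

Each frame of such a sequence could then serve as the style reference for the matching frame of the clip, so the look drifts gradually from the portrait towards the stylised image.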

Realtime image generation

For the portrait sequence towards the end of the film I used the realtime feature from Krea, which generates images from a text prompt and a visual input such as a basic drawing or a screen capture.